Google I/O is the tech giant’s annual developer conference, and Google I/O ’17 wrapped up recently.

The main theme of the entire event was, in fact, machine learning.

We already know that Google is heavily involved in ML and in AI, the umbrella term that covers it. Google’s mission is to organise the world’s information, and Sundar Pichai says the way to do it is by applying deep computer science to solve problems at scale.

Computing is Evolving:

There’s a shift from mobile-first to AI-first computing. As the CEO says, Google is going for an AI-first world, and it is investing heavily in AI and in acquiring AI and robotics start-ups. It hires some of the best minds in the ML field, such as Geoffrey Hinton and many more. (Almost all top tech companies hire ML PhDs. Facebook, for example, has Yann LeCun as its AI head, and your image recognition is bound to be accurate and fast when the person who pioneered CNNs is running your AI research.)

Google Apps involving ML:

Google Photos – Launched two years back at I/O, it uses machine learning to automatically recognise people and locations and to categorise photos. We can also perform contextual search in Google Photos.

The above image shows where Google is using ML: YouTube recommendations, Duo low-bandwidth calling, contextual search in Photos, HDR+ photos and so on. They’ve got their plates full.

Now, like Google Assistant and Allo, Gmail comes with Smart Reply too. The ML systems behind it learn to be conversational with the user.

Google’s AI research:

From touch, we’re moving on to two important modalities of the future: voice recognition and computer vision.

Speech Recognition

Google’s speech recognition error rate has dropped significantly over the years. It’s state of the art. That’s why your phone wakes up even when a YouTube video says “OK, Google”.

A word error rate of 4.9%. Do you understand what that means? Damn, son.
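To put that number in perspective, here is a minimal sketch of how a word error rate is usually computed: the word-level edit distance between what was said and what was transcribed, divided by the number of words actually spoken. The function name and the sample sentences are made up for illustration, and this is not Google’s evaluation code. A rate of 4.9% works out to roughly one wrong word in every twenty.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # a reference word was dropped
                curr[j - 1] + 1,     # an extra word was inserted
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Made-up example: one substitution ("some" -> "sum") and one dropped word ("the").
reference = "ok google play some music in the living room"
hypothesis = "ok google play sum music in living room"
print(f"WER: {word_error_rate(reference, hypothesis):.1%}")  # WER: 22.2%
```

Two mistakes in a nine-word sentence already give 22.2%, which shows how demanding a sub-5% rate is.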

Google’s production costs also went down thanks to neural beamforming, a signal-processing technique implemented with neural networks. It cut the number of microphones Google Home needs to pick up everyone in a room from 6-8 to just 2. Deep learning also raised the number of people it can support to 6.

Computer Vision

Google Photos understands the above picture. It concludes that it was the child’s birthday party and that he was happy.

On image-recognition benchmarks, Google’s computer vision now makes fewer errors than humans do! For real.

You may argue that humans can identify far more categories than the ones used in ImageNet competitions, and that humans can grasp the meaning or value of a picture, but that is another discussion by itself.

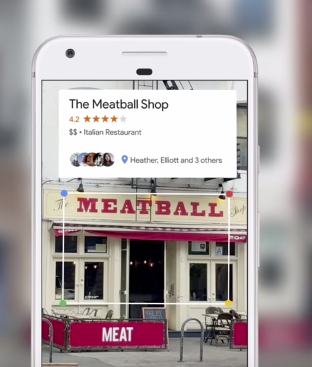

Google Lens: According to Sundar, Google Lens is a set of vision-based computing capabilities that can understand what you’re looking at and help you make decisions using that information.

Some samples (they will ship to newer Pixel devices and Google Assistant):

(Come on! This one was just cool.)

AI-first data centres and TPUs:

Google’s looking for a complete makeover of their data centres in light of the AI push. Computing power is one of the most important things in machine learning. Training on large data sets takes power and time.

Tensor Processing Units – Launched last year, these are custom-made processors for intensive machine learning applications.

Performance: 15-30x faster and 30-80x more power-efficient than the CPUs and GPUs of that time.

Every time you use a Google product like Google Search, you use TPUs. They also powered AlphaGo’s win against Lee Se-dol.

The earlier TPUs were optimised for inference (prediction), whereas training is the more computationally expensive part. Each week, Google used to train on 3 billion samples across more than 300 GPUs.

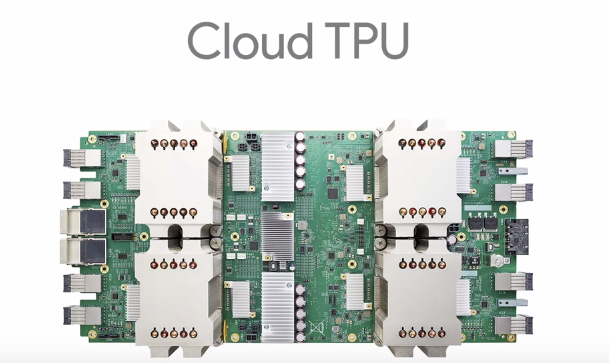

Tadaa–aa. New Generation of TPUs

The above picture is one Cloud TPU board, which has 4 chips.

Performance measures (one board):

180 trillion floating-point operations per second (180 teraflops).

64 TPU boards make 1 TPU pod: 1 killer supercomputer.

1 TPU pod is capable of 11.5 petaflops.
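The pod figure follows directly from the per-board number, and a quick back-of-the-envelope check confirms it. The constant and function names here are my own; the inputs are the keynote figures quoted above.

```python
TFLOPS_PER_BOARD = 180  # one Cloud TPU board (4 chips), keynote figure
CHIPS_PER_BOARD = 4
BOARDS_PER_POD = 64     # boards that make up one TPU pod


def pod_petaflops(boards: int = BOARDS_PER_POD,
                  tflops_per_board: float = TFLOPS_PER_BOARD) -> float:
    """Total pod throughput in petaflops (1 petaflop = 1000 teraflops)."""
    return boards * tflops_per_board / 1000


print(pod_petaflops())                      # 11.52 petaflops per pod
print(TFLOPS_PER_BOARD / CHIPS_PER_BOARD)   # 45.0 teraflops per chip
```

So the 11.5 petaflops quoted for a pod is simply 64 boards times 180 teraflops, rounded down a little.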

This is indeed the kind of hardware advancement that will improve the training phase. It’s not named Cloud TPU for giggles: users can access TPUs for their own machine learning solutions. This, along with NVIDIA’s computing power, makes training much less difficult moving forward.

Google will basically focus on three things: research into better machine learning models, which is a long process involving ML big shots; tools such as TPUs; and apps.

The Google.ai team has been working on pathology, DNA analysis for identifying genetic diseases, molecule analysis, AutoDraw (which became popular a few weeks back) and so forth.

One of the things Google is working on is AutoML, in which a deep neural network trained with reinforcement learning picks the best neural network model out of a set of candidates, using TPUs for the heavy lifting.

Pretty dope stuff. Neural nets building neural nets. If this reaches state-of-the-art accuracy, does it kinda mean researchers don’t have jobs? I don’t know. AI raises such questions of morality and ethics. What if all of us are out of jobs because of far superior, much more competent machines? What if Google is indeed Skynet? What if? What if the rise of AI leads to the doom of mankind?
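To get a feel for the idea, here is a toy sketch of reinforcement-learning-driven model selection. It is not Google’s AutoML: the search space (just the number of layers), the `fake_child_accuracy` scores, and every name below are assumptions made up for illustration. A real system would train each candidate network and use its validation accuracy as the reward.

```python
import math
import random

# Hypothetical search space: how many hidden layers the candidate network gets.
CHOICES = [1, 2, 3, 4]


def fake_child_accuracy(num_layers: int) -> float:
    """Stand-in for 'train the candidate and measure its accuracy'.
    The numbers are invented so that 3 layers is the best choice."""
    return {1: 0.70, 2: 0.80, 3: 0.90, 4: 0.85}[num_layers]


def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def search(steps: int = 2000, lr: float = 0.5, seed: int = 0):
    """REINFORCE on a softmax policy over CHOICES; returns final probabilities."""
    rng = random.Random(seed)
    logits = [0.0] * len(CHOICES)  # the controller's parameters
    baseline = 0.0                 # running average reward, reduces variance
    for _ in range(steps):
        probs = softmax(logits)
        idx = rng.choices(range(len(CHOICES)), weights=probs)[0]
        reward = fake_child_accuracy(CHOICES[idx])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # Gradient of log p(idx) with respect to each logit is (1[k == idx] - p_k).
        for k in range(len(logits)):
            grad = (1.0 if k == idx else 0.0) - probs[k]
            logits[k] += lr * advantage * grad
    return softmax(logits)


for layers, prob in zip(CHOICES, search()):
    print(f"{layers} layers: p = {prob:.3f}")
```

The controller samples a candidate, sees how well it did, and nudges its own preferences towards candidates that beat the running average. Over many rounds it should tend to favour the higher-scoring options, which is the "neural nets picking neural nets" loop in miniature.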

That’s it for now.

Thanks for reading.